To get a few questions out of the way:

What is Terraform?

Terraform is an open-source infrastructure as code software tool created by HashiCorp. Users define and provision data center infrastructure using a declarative configuration language known as HashiCorp Configuration Language, or optionally JSON. Terraform manages external resources with “providers”.

Wikipedia – https://en.wikipedia.org/wiki/Terraform_(software)

So why?

It is great to have all your infrastructure defined as code. I’ve worked with AWS SAM and CloudFormation but was never happy with them, as they only let me define AWS infrastructure.

By using Terraform I can also deploy to other platforms like GCP or Azure.

Central state – why that?

This boils down to two options:

- If you are the only dev working on the infrastructure, use Terraform’s local state. But keep in mind that nobody else can then update the infrastructure: Terraform needs to know the current state of the system, and importing existing infrastructure is a manual process that would have to be repeated every time someone else makes a change.

- With a central state, multiple people can update your infrastructure. By defining IAM roles for AWS you can also restrict what each of them may update. Terraform always loads the latest state before computing the change set to execute.

We will store the central state in S3 and provide locking with DynamoDB. Locking is required because otherwise two updates could run in parallel and leave the state inconsistent.
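To make the locking idea concrete, here is a toy stand-in that uses an atomic mkdir on the local disk instead of DynamoDB. As far as I understand it, the S3 backend does something comparable by writing an item keyed by LockID and giving up if one already exists; the directory name and the function names below are made up for this sketch.

```shell
# Toy stand-in for a state lock: mkdir is atomic, so only one caller can create the directory.
LOCK_DIR="${TMPDIR:-/tmp}/terraform-lock-demo.$$"

acquire_lock() {
  # Succeeds only if the lock does not exist yet.
  mkdir "$LOCK_DIR" 2>/dev/null
}

release_lock() {
  rmdir "$LOCK_DIR"
}

if acquire_lock; then FIRST="acquired"; else FIRST="blocked"; fi

# A second attempt while the lock is still held must be refused.
if acquire_lock; then SECOND="acquired"; else SECOND="blocked"; fi

release_lock

# After the release the lock is available again.
if acquire_lock; then THIRD="acquired"; else THIRD="blocked"; fi
release_lock

echo "first=$FIRST second=$SECOND third=$THIRD"
```

The second attempt is the colleague who starts an update while yours is still running: it is turned away instead of writing to the same state.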

If you want to read more on Terraform state handling, take a look at Yevgeniy Brikman’s article on Medium: https://blog.gruntwork.io/how-to-manage-terraform-state-28f5697e68fa

Step 1 – required tools

AWS CLI

Follow the instructions on https://docs.aws.amazon.com/cli/latest/userguide/install-cliv2.html

Once finished, the output of aws --version should be something like

aws-cli/1.16.309 Python/3.7.3 Darwin/19.2.0 botocore/1.13.45

Use environment variables to configure access to AWS. If you are using profiles, call export AWS_PROFILE=profilename to select one.
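A minimal sketch of the environment-variable setup, assuming static access keys. The key values are placeholders, not working credentials, and us-east-1 is only used because the rest of this post uses it.

```shell
# Static credentials via environment variables (placeholder values, replace with your own).
export AWS_ACCESS_KEY_ID="YOUR_ACCESS_KEY_ID"
export AWS_SECRET_ACCESS_KEY="YOUR_SECRET_ACCESS_KEY"

# Default region for the AWS CLI.
export AWS_DEFAULT_REGION="us-east-1"

# Alternative: use a named profile instead of the two key variables above.
# export AWS_PROFILE=profilename
```

With real credentials in place, aws sts get-caller-identity should print the account and user the CLI resolves to, which is also a handy way to look up your account ID for the next step.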

Terraform CLI

Follow the instructions on https://learn.hashicorp.com/terraform/getting-started/install

Once finished, the output of terraform --version should be something like Terraform v0.12.23

Step 2 – IAM Policy for the State

Create a new IAM policy called terraform-execution to give users access to deploy changes. They need permissions for the Terraform state itself (covered by this policy) as well as for the resources they are supposed to create!

Do not create the S3 bucket or the DynamoDB table manually!

Replace the MY_* placeholders in the policy:

- MY_S3_STATE_BUCKET -> choose a bucket name that is still available

- MY_AWS_ACCOUNT_ID -> replace with your account ID

- MY_DYNAMO_TABLE_NAME -> name of the locking table
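If you prefer not to edit the placeholders by hand, the substitution can be scripted. This is only a sketch: the three values and the file names are hypothetical, and the one-line template stands in for the full policy that follows.

```shell
# Hypothetical values, replace with your own.
STATE_BUCKET="my-company-terraform-state"
ACCOUNT_ID="123456789012"
LOCK_TABLE="terraform-locks"

# Stand-in template; in practice save the complete policy JSON under this name.
cat > policy.template.json <<'EOF'
{"Resource": ["arn:aws:s3:::MY_S3_STATE_BUCKET", "arn:aws:dynamodb:us-east-1:MY_AWS_ACCOUNT_ID:table/MY_DYNAMO_TABLE_NAME"]}
EOF

# Replace every placeholder and write the result to policy.json.
sed -e "s/MY_S3_STATE_BUCKET/${STATE_BUCKET}/g" \
    -e "s/MY_AWS_ACCOUNT_ID/${ACCOUNT_ID}/g" \
    -e "s/MY_DYNAMO_TABLE_NAME/${LOCK_TABLE}/g" \
    policy.template.json > policy.json

# Warn if a placeholder slipped through.
if grep -q 'MY_' policy.json; then
  echo "unreplaced placeholder in policy.json" >&2
fi
```

The resulting file could then be handed to aws iam create-policy --policy-name terraform-execution --policy-document file://policy.json instead of pasting the JSON into the console.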

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor0",
          "Effect": "Allow",
          "Action": [
            "dynamodb:UpdateTimeToLive",
            "dynamodb:PutItem",
            "dynamodb:DeleteItem",
            "s3:ListBucket",
            "dynamodb:Query",
            "dynamodb:UpdateItem",
            "dynamodb:DeleteTable",
            "dynamodb:CreateTable",
            "s3:PutObject",
            "s3:GetObject",
            "dynamodb:DescribeTable",
            "dynamodb:GetItem",
            "s3:GetObjectVersion",
            "dynamodb:UpdateTable"
          ],
          "Resource": [
            "arn:aws:s3:::MY_S3_STATE_BUCKET",
            "arn:aws:s3:::MY_S3_STATE_BUCKET/*",
            "arn:aws:dynamodb:us-east-1:MY_AWS_ACCOUNT_ID:table/MY_DYNAMO_TABLE_NAME"
          ]
        },
        {
          "Sid": "VisualEditor1",
          "Effect": "Allow",
          "Action": [
            "dynamodb:ListTables",
            "s3:HeadBucket"
          ],
          "Resource": "*"
        }
      ]
    }

Step 3

Create a file main.tf with the following content:

    provider "aws" {
      version = "~> 2"
      region  = "us-east-1"
    }

    resource "aws_s3_bucket" "terraform_state" {
      bucket = "MY_S3_STATE_BUCKET"
      acl    = "private"

      # Enable versioning so we can see the full revision history of our state files
      versioning {
        enabled = true
      }

      # Enable server-side encryption by default
      server_side_encryption_configuration {
        rule {
          apply_server_side_encryption_by_default {
            sse_algorithm = "AES256"
          }
        }
      }

      tags = {
        Terraform = "true"
      }
    }

    resource "aws_dynamodb_table" "terraform_locks" {
      name         = "MY_DYNAMO_TABLE_NAME"
      billing_mode = "PAY_PER_REQUEST"
      hash_key     = "LockID"

      attribute {
        name = "LockID"
        type = "S"
      }

      tags = {
        Terraform = "true"
      }
    }

Now, to install everything Terraform depends on, such as the AWS provider, execute terraform init

Now, to create the S3 bucket and DynamoDB table, first plan the changes with terraform plan

and then execute them with terraform apply

It is always a good idea to call plan first, as this gives you an overview of the changes Terraform is about to execute. With apply the changes are actually executed. Note that apply works out the changes again, so the delta to the current state may differ from what plan showed if several people are working on the infrastructure or if you changed something in the account itself!

– cautious dev
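One way to make sure that what you reviewed is what gets executed is to save the plan to a file and apply exactly that file. A minimal sketch, assuming Terraform 0.12; the function name and the file name tfplan are arbitrary choices for this example.

```shell
# Save the plan to a file, review it, then apply exactly that plan.
plan_and_apply() {
  terraform plan -out=tfplan || return 1

  # Print the saved plan again in human-readable form for review.
  terraform show tfplan || return 1

  # Ask before touching real infrastructure; applying a saved plan does not prompt on its own.
  printf 'Apply this plan? [y/N] '
  read -r answer
  [ "$answer" = "y" ] || { echo "aborted"; return 1; }

  terraform apply tfplan
}
```

Call plan_and_apply from the directory that contains main.tf. If the state changed after the plan was saved, Terraform should reject the saved plan as stale rather than apply it, so you simply plan again.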

Step 4 – enable Central State

At the end of the main.tf file, now add the following lines:

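    terraform {
      backend "s3" {
        bucket         = "MY_S3_STATE_BUCKET"
        key            = "global/s3/terraform.tfstate"
        region         = "us-east-1"
        dynamodb_table = "MY_DYNAMO_TABLE_NAME"
        encrypt        = true
      }
    }

With this in place, run terraform init again; plan and apply refuse to run until the new backend has been initialized. After that, call plan and then terraform apply as before.

During init, Terraform detects the new backend and offers to transfer your current local state to the central state!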
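A short sketch of the switch to the S3 backend, assuming Terraform 0.12 and the bucket and key from the backend block above. The function name is made up, and MY_S3_STATE_BUCKET still has to be replaced with your bucket name.

```shell
# Re-initialize Terraform for the new backend and check that the state arrived in S3.
migrate_state_to_s3() {
  # init notices the new backend block and asks whether to copy the existing local state.
  terraform init || return 1

  # The state object should now be listed under the key from the backend block.
  aws s3 ls "s3://MY_S3_STATE_BUCKET/global/s3/"
}
```

If the listing shows terraform.tfstate, the state has been copied, and because versioning is enabled on the bucket every later change to it is kept as a new version.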

Step 5

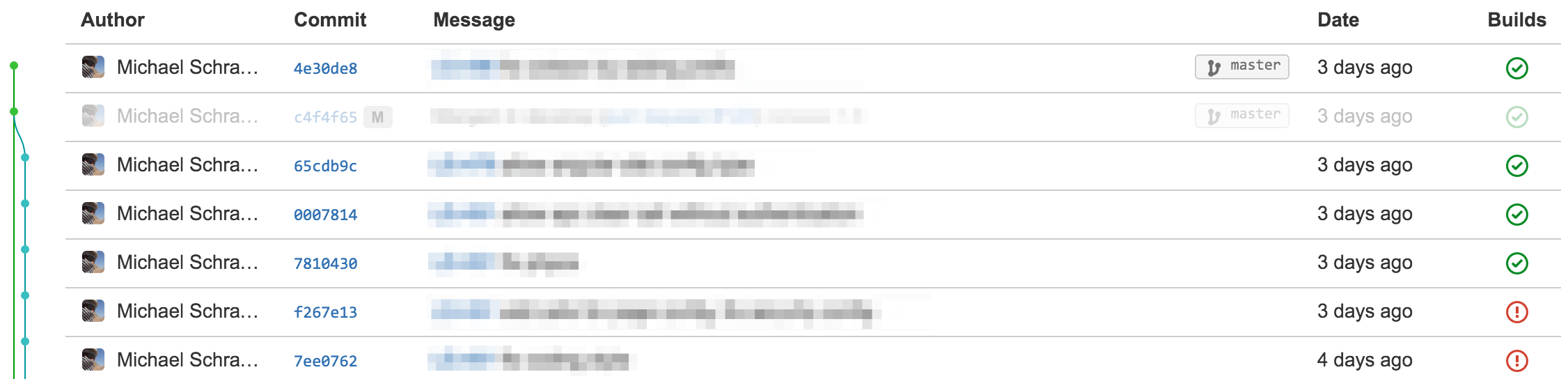

Now commit your main.tf file to source control and start collaborating with other devs on your infrastructure!

This also makes it a lot easier to reuse infrastructure across projects!
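One way to reuse this setup in another project is to point that project at the same bucket and lock table but give it its own state key. The sketch below writes such a backend file; the project name and the projects/... key layout are assumptions for illustration, not something Terraform prescribes.

```shell
# Hypothetical name of the second project.
PROJECT="my-other-project"

# Generate a backend configuration that shares the bucket and lock table
# but keeps this project's state under a separate key.
cat > backend.tf <<EOF
terraform {
  backend "s3" {
    bucket         = "MY_S3_STATE_BUCKET"
    key            = "projects/${PROJECT}/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "MY_DYNAMO_TABLE_NAME"
    encrypt        = true
  }
}
EOF
```

Running terraform init in that project then sets up its state next to the first one, without the two ever sharing a state file.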